Sink Resources configuration in TKGI with self signed certificates

+++
author = "Shubham Sharma"
title = "Sink Resources configuration in TKGI with self signed certificates"
menuTitle = "Sink Resources configuration in TKGI"
date = "2021-03-13"
description = "How to configure Sink Resources in Tanzu Kubernetes Grid Integrated Edition with self signed certificates."
featured = false
series = ["TKGI"]
aliases = [
  "/tags/PKS/",
  "/tags/TKGI/",
  "/tags/Kubernetes/",
  "/tags/Certificates/",
  "/tags/Sink/",
  "/post/tkgi-sink-configuration",
  "/tanzu/tkgi/tkgi-sink-configuration"
]
+++

In Tanzu Kubernetes Grid Integrated Edition (TKGI), sinks collect logs and metrics about the Kubernetes worker nodes in your TKGI deployment and the workloads running on them. For more information about sinks and their architecture, please refer to the official documentation. In this post we will set up a syslog server using Docker and configure TKGI sinks to use it.

Pre-requisites¶

- An existing TKGI cluster

- Docker installed on the machine that will act as the Syslog server

- Network connectivity between Syslog server and TKGI cluster

Generating self-signed certificate¶

This step generates two files: cert.pem and key.pem. You'll be asked to enter some information after running the command. Because the certificate is self-signed, cert.pem acts as both the CA certificate and the server certificate.
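The command itself is not shown here. A minimal sketch using OpenSSL follows. The key size, the 365-day validity, and the subject are assumptions, with the CN taken from the syslog host name used in the sink specs later in this post. Passing -subj makes the command non-interactive; leave it out to get the interactive prompts mentioned above.

```shell
# Generate a self-signed certificate (cert.pem) and an unencrypted private key (key.pem).
# Assumptions: RSA 4096-bit key, 365-day validity, CN matching the syslog server host name.
openssl req -x509 -newkey rsa:4096 -nodes \
  -keyout key.pem -out cert.pem -days 365 \
  -subj "/CN=opsmgr.outofmemory.com"

# Inspect the result: print the subject and expiry date of the new certificate.
openssl x509 -in cert.pem -noout -subject -enddate
```

The key is left unencrypted (-nodes) so that syslog-ng can read it at startup without a passphrase.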

Syslog Server Configuration¶

Save the following configuration as syslog-ng.conf:

@version: 3.29
@include "scl.conf"

source s_local {
    internal();
};

source s_network {
    default-network-drivers(
        # NOTE: TLS support
        #
        # the default-network-drivers() source driver opens the TLS
        # enabled ports as well, however without an actual key/cert
        # pair they will not operate and syslog-ng would display a
        # warning at startup.
        tls(key-file("/etc/syslog-ng/key.pem") cert-file("/etc/syslog-ng/cert.pem") peer-verify(optional-untrusted))
    );
};

destination d_local {
    file("/var/log/messages");
    file("/var/log/messages-kv.log" template("$ISODATE $HOST $(format-welf --scope all-nv-pairs)\n") frac-digits(3));
};

log {
    source(s_local);
    source(s_network);
    destination(d_local);
};

Running syslog server using Docker¶

The machine running the syslog Docker container must be reachable from the TKGI worker nodes.

docker run --name syslog_server -p 514:514/udp -p 601:601 -p 6514:6514 -it -d \
  -v "$PWD/syslog-ng.conf":/etc/syslog-ng/syslog-ng.conf \
  -v "$PWD/key.pem":/etc/syslog-ng/key.pem \
  -v "$PWD/cert.pem":/etc/syslog-ng/cert.pem \
  balabit/syslog-ng:latest
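Before configuring any sinks, it can help to check that the TLS listener on port 6514 presents the expected certificate. The sketch below assumes that opsmgr.outofmemory.com (the host used in the sink specs later in this post) resolves to the Docker host and that cert.pem is in the current directory.

```shell
# Confirm the container is running.
docker ps --filter "name=syslog_server"

# Open a TLS connection to the syslog port and verify the server certificate
# against the self-signed cert.pem, which doubles as the CA certificate.
# Assumption: the host name resolves to the machine running the container.
openssl s_client -connect opsmgr.outofmemory.com:6514 -CAfile cert.pem </dev/null
```

OpenSSL reports a successful verification as `Verify return code: 0 (ok)` near the end of its output.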

Once the syslog server is up, you can monitor its logs by tailing /var/log/messages inside the container.
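The monitoring command is not shown here. The tail invocation used in the verification steps later in this post serves the purpose, assuming the container name syslog_server from the docker run command:

```shell
# Follow the messages file that the d_local destination writes to.
docker exec -ti syslog_server tail -f /var/log/messages
```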

Before we dive into the actual sink configuration, let's deploy an application in the TKGI Kubernetes cluster that continuously writes logs. Later we will confirm that these logs are visible on the syslog server.
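The deployment command is not included here. As a sketch: the log output later in this post shows a deployment named loggera with a container named random-logger. The image chentex/random-logger used below is an assumption; any image that writes to stdout would do.

```shell
# Create a test deployment that continuously writes log lines to stdout.
# Assumption: the chentex/random-logger image; the post does not name the image it used.
kubectl create deployment loggera --image=chentex/random-logger:latest

# Confirm the pod is running and producing output.
kubectl get pods -l app=loggera
kubectl logs deployment/loggera --tail=5
```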

TKGI Sink Configuration¶

We will cover two ways to configure sink resources:

- TLS enabled on Syslog server and Sink is configured with insecure_skip_verify=true

- TLS enabled on Syslog server and insecure_skip_verify can not be used in Sink configuration

TLS enabled on Syslog server and Sink is configured with insecure_skip_verify set to true¶

Create and apply Sink Resource¶

apiVersion: pksapi.io/v1beta1
kind: LogSink
metadata:
  name: tlslogsink
  namespace: default
spec:
  type: syslog
  host: opsmgr.outofmemory.com
  port: 6514
  enable_tls: true
  insecure_skip_verify: true

After applying the sink resource, wait for the fluent-bit pods to restart.
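The apply command is missing here. A minimal sketch, assuming the LogSink manifest above was saved as tlslogsink.yaml (a hypothetical file name) and that the fluent-bit pods run in the pks-system namespace, as the configmap step later in this post suggests:

```shell
# Assumption: the manifest above was saved as tlslogsink.yaml.
kubectl apply -f tlslogsink.yaml

# Confirm the resource was created.
kubectl get -f tlslogsink.yaml

# Watch the fluent-bit pods restart (press Ctrl+C to stop watching).
# Assumption: they live in the pks-system namespace.
kubectl -n pks-system get pods --watch
```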

To generate logs, you can run a scale operation on a test deployment. This should generate enough logs to verify syslog connectivity.
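For example, the log output further down shows the loggera replica set being scaled up to 20, which corresponds to a command along these lines (assuming the deployment is named loggera and lives in the default namespace):

```shell
# Scale the test deployment up; each new pod produces Kubernetes events and pod logs.
kubectl scale deployment loggera --replicas=20
```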

To verify that logs are received on the syslog server, run the following:

docker exec -ti syslog_server tail -f /var/log/messages

Mar 13 19:53:44 ebe72cb3e20a syslog-ng[1]: Syslog connection accepted; fd='20', client='AF_INET(10.10.10.72:37704)', local='AF_INET(0.0.0.0:6514)'

Mar 13 19:53:43 10.10.10.72 k8s.event/default/loggera/: Scaled up replica set loggera-794cd77b84 to 20

Mar 13 19:53:43 10.10.10.72 k8s.event/default/loggera-794cd77b84/: Created pod: loggera-794cd77b84-jnt87

Mar 13 19:53:44 10.10.10.72 k8s.event/default/loggera-794cd77b84/: Created pod: loggera-794cd77b84-5nflk

Mar 13 19:53:44 10.10.10.72 k8s.event/default/loggera-794cd77b84-jnt87/: Successfully assigned default/loggera-794cd77b84-jnt87 to 8d092150-f0be-48c7-b30b-5d91738eafef

Mar 13 19:53:44 10.10.10.72 k8s.event/default/loggera-794cd77b84-58cz2/: Successfully assigned default/loggera-794cd77b84-58cz2 to 8d092150-f0be-48c7-b30b-5d91738eafef

Mar 13 19:53:44 10.10.10.72 k8s.event/default/loggera-794cd77b84-5nflk/: Successfully assigned default/loggera-794cd77b84-5nflk to 8d092150-f0be-48c7-b30b-5d91738eafef

TLS enabled on syslog server and insecure_skip_verify can not be used in Sink configuration¶

This scenario applies when we want to trust an internal CA or use a self-signed certificate. To do so, we create a configmap that holds the self-signed certificate. Once the configmap is created, we mount it into the fluent-bit pods so that they can establish a verified TLS connection to the syslog server.

Create and apply sink resource¶

apiVersion: pksapi.io/v1beta1
kind: LogSink
metadata:
  name: tlslogsink
  namespace: default
spec:
  type: syslog
  host: opsmgr.outofmemory.com
  port: 6514
  enable_tls: true

Create Configmap to store the CA certificate¶

kubectl -n pks-system create configmap ca-pemstore --from-file=cert.pem

kubectl -n pks-system describe configmap ca-pemstore

Name: ca-pemstore

Namespace: pks-system

Data

====

cert.pem:

----

-----BEGIN CERTIFICATE-----

MIIF1TCCA72gAwIBAgIJAM+vjwVoWDilMA0GCSqGSIb3DQEBCwUAMIGAMQswCQYD

-----END CERTIFICATE-----
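As an optional check, the certificate stored in the configmap can be decoded to confirm it is the one the syslog server presents. This is a sketch that assumes openssl is available on the machine running kubectl:

```shell
# Extract cert.pem from the configmap and print its subject and expiry date.
# The dot in the key name must be escaped inside the jsonpath expression.
kubectl -n pks-system get configmap ca-pemstore -o jsonpath='{.data.cert\.pem}' \
  | openssl x509 -noout -subject -enddate
```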

Patch the fluent-bit daemonset to mount the configmap into the trusted certificate store:

{
  "spec": {
    "template": {
      "spec": {
        "containers": [
          {
            "name": "fluent-bit",
            "volumeMounts": [
              {
                "mountPath": "/etc/ssl/certs/cert.pem",
                "subPath": "cert.pem",
                "name": "ca-pemstore"
              }
            ]
          }
        ],
        "volumes": [
          {
            "name": "ca-pemstore",
            "configMap": {
              "name": "ca-pemstore",
              "defaultMode": 420
            }
          }
        ]
      }
    }
  }
}

We can apply this patch to the fluent-bit daemonset using kubectl patch.
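The exact command is missing here. A sketch, assuming the JSON patch above was saved as fluent-bit-patch.json (a hypothetical file name) and that the daemonset is named fluent-bit in the pks-system namespace:

```shell
# Apply the patch; kubectl uses a strategic merge by default, so the new
# volumeMount and volume are merged into the existing lists by name.
kubectl -n pks-system patch daemonset fluent-bit --patch "$(cat fluent-bit-patch.json)"

# Wait until the patched pods are rolled out on every node
# (only available when the daemonset uses the RollingUpdate strategy).
kubectl -n pks-system rollout status daemonset/fluent-bit
```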

To generate logs, you can run a scale operation on a test deployment. This should generate enough logs to verify syslog connectivity.

To verify that logs are received on the syslog server, run the following:

docker exec -ti syslog_server tail -f /var/log/messages

Mar 13 20:18:02 ebe72cb3e20a syslog-ng[1]: Syslog connection accepted; fd='20', client='AF_INET(10.10.10.72:39548)', local='AF_INET(0.0.0.0:6514)'

Mar 13 20:18:01 10.10.10.72 k8s.event/default/loggera/: Scaled down replica set loggera-794cd77b84 to 1

Mar 13 20:18:01 10.10.10.72 k8s.event/default/loggera-794cd77b84-xqp7n/: Stopping container random-logger

Mar 13 20:18:01 10.10.10.72 k8s.event/default/loggera-794cd77b84-jb5rw/: Stopping container random-logger

Mar 13 20:18:01 10.10.10.72 k8s.event/default/loggera-794cd77b84-tnxkt/: Stopping container random-logger

Mar 13 20:18:01 10.10.10.72 k8s.event/default/loggera-794cd77b84-hfvdc/: Stopping container random-logger

Mar 13 20:18:03 10.213.45.78 pod.log/default/loggera-57b85ddbf4-cvnz6/random-: 2021-03-13T20:18:03+0000 WARN A warning that should be ignored is usually at this level and should be actionable.

Mar 13 20:18:05 10.213.45.78 pod.log/default/loggera-57b85ddbf4-cvnz6/random-: 2021-03-13T20:18:05+0000 INFO This is less important than debug log and is often used to provide context in the current task.

Mar 13 20:18:08 10.213.45.78 pod.log/default/loggera-57b85ddbf4-cvnz6/random-: 2021-03-13T20:18:08+0000 INFO This is less important than debug log and is often used to provide context in the current task.

Mar 13 20:18:11 10.213.45.78 pod.log/default/loggera-57b85ddbf4-cvnz6/random-: 2021-03-13T20:18:11+0000 WARN A warning that should be ignored is usually at this level and should be actionable.

Note: The caveat with this approach is that the changes do not persist across a TKGI upgrade. During an upgrade, TKGI runs the bosh errand apply-addon, which resets add-ons such as coredns and fluent-bit to their default state.

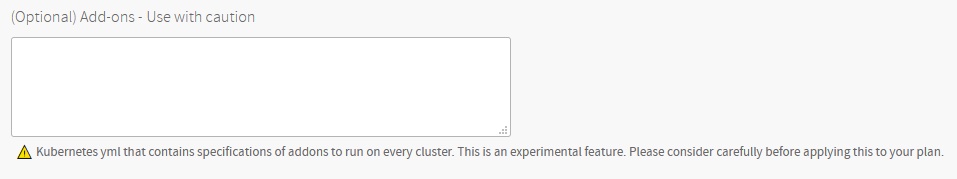

A workaround is to export the fluent-bit DaemonSet definition after applying the patch, together with the configmap we created earlier, and then add both to the add-ons section of the plan under which your cluster was created.
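The export could look like the sketch below. The output file names are hypothetical, and the daemonset name and namespace are assumed to be fluent-bit and pks-system as in the earlier steps.

```shell
# Export the patched daemonset and the configmap as YAML.
kubectl -n pks-system get daemonset fluent-bit -o yaml > fluent-bit-daemonset.yaml
kubectl -n pks-system get configmap ca-pemstore -o yaml > ca-pemstore.yaml
```

The exported files include server-generated fields such as status, uid, and resourceVersion, which should be removed before the definitions are pasted into the plan's add-ons section.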